Moral uncertainty is uncertainty over the definition of good. For example, you might broadly accept utilitarianism, but still have some credence in deontological principles occasionally being more right.

Moral uncertainty is different from epistemic uncertainty (uncertainty about our knowledge and its sources, including uncertainty over how uncertain we should be about these things). In practice these often mix – uncertainty over an action can easily involve both moral and epistemic uncertainty – but since is-ought confusions are a common trap in any discussion, it is good to keep these ideas firmly separate.

Dealing with moral uncertainty

Thinking about moral uncertainty quickly gets us into deep philosophical waters.

How do we decide which action to take? One approach is called “My Favourite Theory” (MFT), which is to act entirely in accordance with the moral theory you think is most likely to be correct. There are a number of counterarguments, many of which revolve around problems of how we draw boundaries between theories: if you have 0.1 credence in each of 8 consequentialist theories and 0.2 credence in a deontological theory, should you really be a strict deontologist? (More fundamentally: say we have some credence in a family of moral systems with a continuous range of variants – say, differing by arbitrarily small differences in the weights assigned to various forms of happiness – does MFT require we reject this family of theories in favour of ones that vary only discretely, since in the former case the probability of a particular variant being correct is infinitesimal?) For a defence of MFT, see this paper.

If we reject MFT, when making decisions we have to somehow make comparisons between the recommendations of different moral systems. Some regard this as nonsensical; others write theses on how to do it (some of the same ground is covered in a much shorter space here; this paper also discusses the same concerns with MFT that I mentioned in the last paragraph, and problems with switching to “My Favourite Option” – acting according to the option that is most likely to be correct, summed over all moral theories you have credence in).
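To make the difference between MFT and “My Favourite Option” concrete, here is a minimal sketch using the credences from the example above (eight consequentialist variants at 0.1 each, one deontological theory at 0.2). The option names and the assumption that all consequentialist variants happen to agree on this decision are mine, purely for illustration.

```python
from collections import defaultdict

# Credences from the example in the text: eight consequentialist variants
# at 0.1 each and a single deontological theory at 0.2.
credences = {f"consequentialism_{i}": 0.1 for i in range(1, 9)}
credences["deontology"] = 0.2

# Hypothetical recommendations for one decision (an assumption for illustration):
# every consequentialist variant recommends act_A, deontology recommends act_B.
recommends = {theory: "act_A" for theory in credences}
recommends["deontology"] = "act_B"


def my_favourite_theory(credences, recommends):
    """Follow the single theory with the highest credence."""
    favourite = max(credences, key=credences.get)
    return recommends[favourite]


def my_favourite_option(credences, recommends):
    """Pick the option with the most total credence behind it."""
    support = defaultdict(float)
    for theory, credence in credences.items():
        support[recommends[theory]] += credence
    return max(support, key=support.get)


print(my_favourite_theory(credences, recommends))  # act_B
print(my_favourite_option(credences, recommends))  # act_A
```

MFT follows the 0.2 theory even though theories holding a combined 0.8 credence disagree with it, which is exactly the boundary-drawing worry: lump the eight variants together as one theory and MFT flips its answer.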

Another less specific idea is the parliamentary model. Imagine that all moral theories you have some credence in send delegates to a parliament, who can then negotiate, bargain, and vote their way to a conclusion. We can imagine delegates for a low-credence theory generally being overruled, but, on the issues most important to that theory, being able to bargain their way to changing the result.

(In a nice touch of subtlety, the authors take care to specify that though the parliament acts according to a typical 50%-to-pass principle, the delegates act as if they believe that the percentage of votes for an action is the probability that it will happen, removing the perverse incentives generated by an arbitrary threshold.)

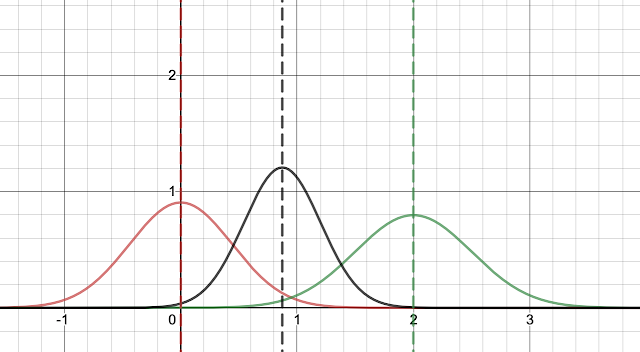

As an example of other sorts of meta-ethical considerations, Robin Hanson compares the process of fitting a moral theory to our moral intuitions to fitting a curve (the theory) to a set of data points (our moral intuitions). He argues that there’s enough uncertainty over these intuitions that we should take heed of a basic principle of curve-fitting: keep it simple, or otherwise you will overfit, and your curve will veer off in one direction or another when you try to extrapolate.
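The curve-fitting principle itself is easy to see in a toy example. The sketch below is my own illustration, not Hanson’s: the “true” rule, the noise level, and the choice of fitting methods are all assumptions. It fits the same ten noisy points with a straight line and with a polynomial that passes through every point exactly, then asks both to extrapolate.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_exact_polynomial(xs, ys):
    """Lagrange interpolation: a polynomial through every data point (a deliberate overfit)."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def true_rule(x):
    # Hypothetical underlying rule that the noisy "intuitions" are sampled from.
    return 2.0 * x + 1.0


rng = random.Random(0)  # fixed seed so the run is repeatable
xs = [i / 9 for i in range(10)]  # ten points spread over [0, 1]
ys = [true_rule(x) + rng.gauss(0, 0.1) for x in xs]  # noisy observations

simple_fit = fit_line(xs, ys)
complex_fit = fit_exact_polynomial(xs, ys)

x_new = 2.0  # well outside the range of the data
simple_error = abs(simple_fit(x_new) - true_rule(x_new))
complex_error = abs(complex_fit(x_new) - true_rule(x_new))
print(f"line error at x=2: {simple_error:.3f}")
print(f"exact-fit polynomial error at x=2: {complex_error:.3f}")
```

The exact-fit polynomial has zero error on the data it was given and a far larger error than the line once it leaves that range, because it has faithfully fitted the noise.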

Mixed moral and epistemic uncertainty

Cause X

We are probably committing a moral atrocity without being aware of it.

This is argued here. The first argument is that past societies have been unaware of serious moral problems and we don’t have strong enough reasons to believe ourselves exempt from this rule. The second is that there are many sources of potential moral catastrophe – there are very many ways of being wrong about ethics or being wrong about key facts – so though we can’t point to any specific likely failure mode with huge consequences, the probability that at least one exists isn’t low.
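The second argument is at heart a piece of arithmetic: many individually unlikely failure modes add up. As a rough sketch, assuming the failure modes are independent and equally likely (both simplifications of mine, and the numbers are made up):

```python
def prob_at_least_one(p_each, n_sources):
    """Chance that at least one of n independent failure modes is real,
    if each one has probability p_each."""
    return 1 - (1 - p_each) ** n_sources


# Hypothetical numbers: 50 candidate failure modes, each only 2% likely.
print(round(prob_at_least_one(0.02, 50), 2))  # about 0.64
```

No single candidate looks worrying at 2%, but the chance that all fifty are false alarms is only about a third.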

In addition to an ongoing moral catastrophe, it could be that we are overlooking an opportunity to achieve a lot of good for cheap. In either case there would be a cause, dubbed Cause X, which would be a completely unknown but extremely important way of improving the world.

(In either case, the cause would likely involve both moral and epistemic failure: we’ve both failed to think carefully enough about ethics to see what it implies, and failed to spot important facts about the world.)

“Overlooked moral problem” immediately invites everyone to imagine their pet cause. That is not what Cause X is about. Imagine a world where every cause you support triumphed. What would still be wrong about this world? Some starting points for answering this are presented here.

If you say “nothing”, consider MacAskill’s anecdote in the previous link: Aristotle was smart and spent his life thinking about ethics, but still thought slavery made sense.

Types of epistemic uncertainty

Flawed brains

A basic cause for uncertainty is that human brains make mistakes. Especially important are biases, which consistently make our thinking wrong in the same way. This is a big and important topic; the classic book is Kahneman’s Thinking, Fast and Slow, but if you prefer sprawling and arcane chains of blog posts, you’ll find plenty here. I will only briefly mention some examples.

The most important bias to avoid when thinking about EA may be scope neglect. In short, people don’t automatically multiply. It is the image of a starving child that counts in your brain, and your brain gives this image the same weight whether the number you see on the page has three zeros or six after it. Trying to reason about any big problem without being very mindful of scope neglect is like trying to captain a ship that has no bottom: you will sink before you move anywhere.

Many biases are difficult to counter, but occasionally someone thinks of a clever trick. Status quo bias is a preference for keeping things as they are. It can often be spotted through the reversal test. For example, say you argue that we shouldn’t lengthen human lifespans further. Ask yourself: should we then decrease life expectancy? If you think that we should have neither more nor less of something, you should also have a good reason for why it just so happens that we have an optimum amount already. What are the chances that the best possible lifespan for humans also happens to be the highest one that present technology can achieve?

Crucial considerations

A crucial consideration is something that flips (or otherwise radically changes) the value of achieving a general goal.

For example, imagine your goal is to end raising cows for meat, because you want to prevent suffering. Now say there’s a fancy new brain-scanner that lets you determine that even though the cow ends up getting chucked into a meat grinder, on average the cow’s happiness is above the threshold at which existence becomes preferable to non-existence (assume this is a well-defined concept in your moral system). Your morals are the same as before, but now they’re telling you to raise more cows for meat.

An example of a chain of crucial considerations is whether or not we should develop some breakthrough but potentially dangerous technology, like AI or synthetic biology. We might think that the economic and personal benefits make it worth the expense, but a potential crucial consideration is the danger of accidents or misuse. There might be another crucial consideration that it’s better to have the technology developed internationally and in the open, rather than have advances made by rogue states.

There are probably many crucial considerations that are either unknown or unacknowledged, especially in areas that we haven’t thought about for very long.

Cluelessness

The idea of cluelessness is that we are extremely uncertain about the impact of every action. For example, making a car stop as you cross the street might affect a conception later that day, and might make the difference between the birth of a future Gandhi or Hitler later on. (Note that many non-consequentialist moral systems seem even more prone to cluelessness worries – William MacAskill points this out in this paper, and argues for it more informally here.)

I’m not sure I fully understand the concerns. I’m especially confused about what the practical consequences of cluelessness should be on our decision-making. Even if we’re mostly clueless about the consequences of our actions, we should still base our decisions on the small amount of information we do have. However, at the very least it’s worth keeping in mind just how big uncertainty over consequences can be, and there are a bunch of philosophy paper topics here.

For more on cluelessness, see for example:

- Simplifying Cluelessness (an argument that cluelessness is an important and real consideration)

- an in-depth look at different forms of cluelessness

- the author of the previous paper discussing related ideas in a podcast (a transcript is available).

Reality is underpowered

Imagine we resolve all of our uncertainties over moral philosophy, iron out the philosophical questions posed by cluelessness, confidently identify Cause X, avoid biases, find all crucial considerations, and all that remains is the relatively down-to-earth work of figuring out which interventions are most effective. You might think this is simple: run a bunch of randomised controlled trials (RCTs) on different interventions, publish the papers, and maybe wait for a meta-analysis to combine the results of all relevant papers before concluding that the matter is solved.

Unfortunately, it’s often the case that reality is underpowered (in the statistical sense): we can’t run the experiments or collect the data that we’d need to answer our questions.

To take an extreme example, there are many different factors that affect a country’s development. To really settle the issue, we might make groups of, say, a dozen countries each, give them different amounts of the development factors (holding everything else fairly constant), watch them develop over 100 years, run a statistical analysis of the outcomes, and then draw conclusions about how much the factors matter. But try finding hundreds of identical countries with persuadable national leaders (and at least one country must have a science ethics board that lets this study go forwards).
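To get a feel for what “underpowered” means with a dozen countries per group, here is a small simulation. It is a sketch under assumptions of my own: a true effect of 0.3 standard deviations, normally distributed outcomes, and a standard two-sample t-test at the 5% level (the critical values are hard-coded from t-tables).

```python
import random


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, variance


def estimated_power(n_per_group, effect_size, critical_t, trials=500, seed=0):
    """Fraction of simulated two-group studies in which |t| exceeds critical_t,
    i.e. how often a real effect of the given size would be detected."""
    rng = random.Random(seed)
    detections = 0
    for _ in range(trials):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(effect_size, 1.0) for _ in range(n_per_group)]
        mean_c, var_c = mean_and_variance(control)
        mean_t, var_t = mean_and_variance(treated)
        standard_error = ((var_c + var_t) / n_per_group) ** 0.5
        if abs((mean_t - mean_c) / standard_error) > critical_t:
            detections += 1
    return detections / trials


# A dozen "countries" per group (critical t for 22 degrees of freedom).
small_study = estimated_power(n_per_group=12, effect_size=0.3, critical_t=2.074)
# A study size we can only dream of (critical t for 998 degrees of freedom).
large_study = estimated_power(n_per_group=500, effect_size=0.3, critical_t=1.962)
print(f"power with 12 per group: {small_study:.2f}")
print(f"power with 500 per group: {large_study:.2f}")
```

Under these assumptions the dozen-per-group study misses a perfectly real effect most of the time, while the large study almost always finds it. The effect is there in both cases; only our ability to see it differs.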

To draw a metaphor with a different sort of power: the answers to our questions (on what effects are the most important in driving some phenomenon, or which intervention is the most effective) exist, sharp and clear, but the telescopes with which we try to see them aren’t good enough. The best we can do is interpret the smudges we do see, inferring as much as we can without the brute force of an RCT.

This is an obvious point, but an important one to keep in mind to temper the rush to say we can answer everything if only we run the right study.

Conclusions?

All this uncertainty might seem to imply two conclusions. I support one of them but not the other.

The first conclusion is that the goal of doing good is complicated and difficult (as is the subgoal of having accurate beliefs about the world). This is true, and important to remember. It is tempting to forget analysis and fall back on feelings of righteousness, or to switch to easier questions like “what feels right?” or “what does society say is right?”

The second conclusion is that this uncertainty means we should try less. This is wrong. Uncertainties may rightly redirect efforts towards more research, and reducing key uncertainties is probably one of the best things we can do, but there’s no reason why they should make us reduce our efforts.

Uncertainty and confusion are properties of minds, not reality; they exist on the map, not the territory. To every well-formed question there is an answer. We need only find it.